Updated real_libby GPT-2 chatbot

real_libby, my retrained-GPT-2 Slack chatbot hosted on a Raspberry Pi 4, eventually corrupted the SD card. Then I couldn't find her brain (the only one I could find was business-Slack real_libby, who is completely different). So since I was rebuilding her anyway, I thought I'd bring her up to date with Covid and the rest of it.

For the fine-tuning data: since I made the first version in 2019 I've more or less stopped using IRC ( :'-( ) and use Signal instead. I still use iMessage, and use WhatsApp more. For a while I couldn't figure out how to get hold of my Signal data, so I first built an iMessage / WhatsApp version, as that's pretty easy with my setup (details below; basically sqlite3 databases from an unencrypted backup of my iPhone). I had about 30K lines to retrain with, which I did as before with nshepperd's gpt-2 finetuning branch (commands below), this time on my M1 MacBook Pro.

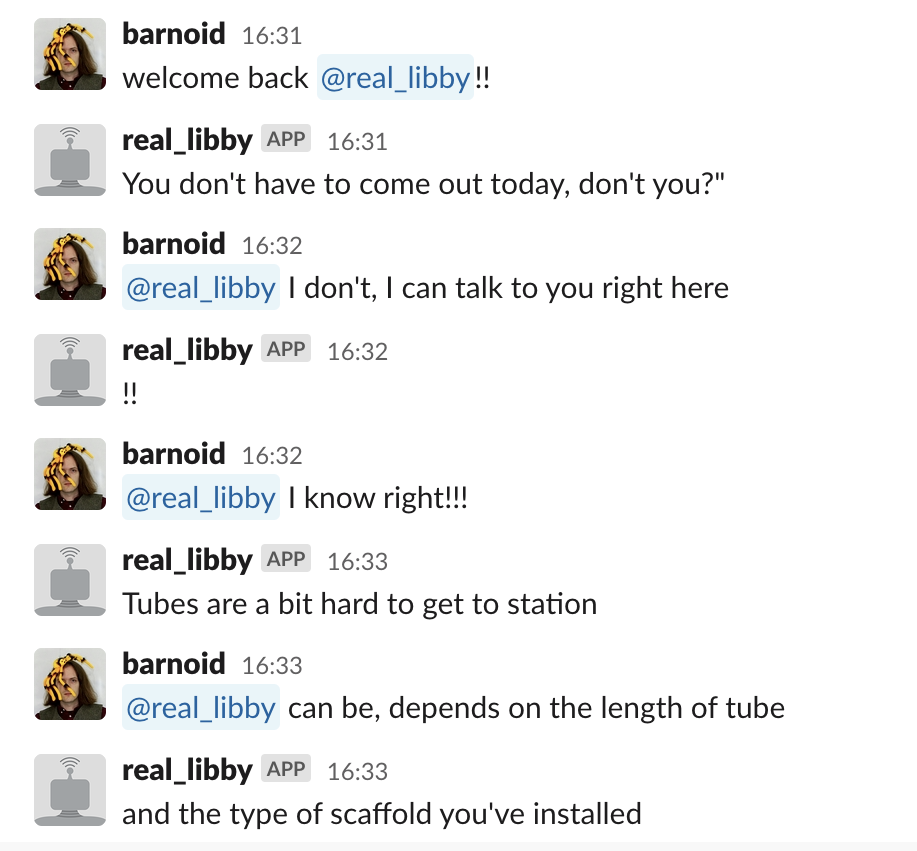

The iMessage/WhatsApp version uses exclamation marks too much and goes on about trains excessively. Not super interesting.

It is in fact possible to get Signal messages out, as long as you use the desktop client, which I do. The catch is that messages aren't transferred between clients, so the desktop only has the ones received while that device was authorised. Even so, I had 5K lines to play with.

I think Signal-libby is more interesting, though she also seems closer to the source crawl, so I'm more nervous about letting her loose. But she's not said anything bad so far.

Details below for the curious. It's much like my previous attempt but there were a few fiddly bits.

The Signal version is a bit more apt, I think, and says longer and more complex things.

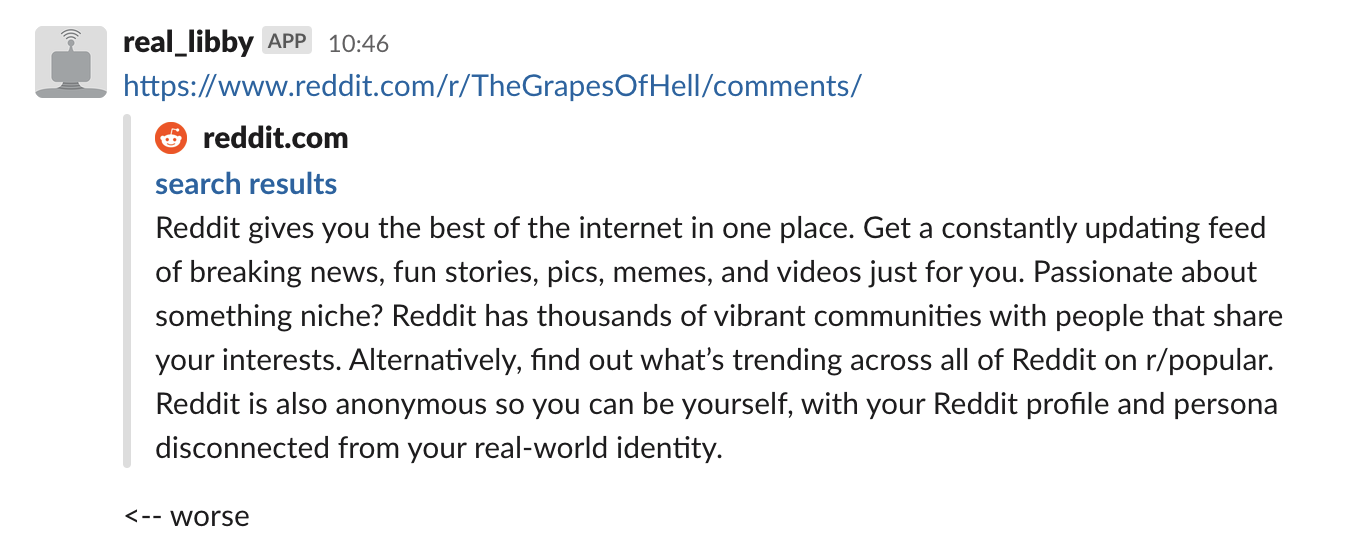

She makes up URLs quite a bit; Signal is where I share links most often.

Maybe I'll try a combined version next and see if there's any improvement.

Getting data

A note on getting data from your phone: unencrypted backups are bad. Most of your data is just lying about in them, a bit obfuscated but trivially easy to get at. The commands below only get out your own data; Baskup helps you get out more.

iMessages are in

/Users/[me]/Library/Application\ Support/MobileSync/Backup/[long number]/[short number]/3d0d7e5fb2ce288813306e4d4636395e047a3d28

sqlite3 3d0d7e5fb2ce288813306e4d4636395e047a3d28

.once libby-imessage.txt

select text from message where is_from_me = 1 and text not like 'Liked%';
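If you'd rather script this than type into the sqlite3 shell, here is a minimal Python sketch of the same query using the standard-library sqlite3 module. It assumes the same `message` table and `text` / `is_from_me` columns as the query above; the function names, the `ORDER BY ROWID`, and the choice to join multi-line messages onto one line are my own assumptions, not part of the original setup.

```python
import sqlite3


def export_imessage(conn: sqlite3.Connection) -> list[str]:
    """Return the text of every message I sent, one entry per message.

    Mirrors the sqlite3 shell query: only is_from_me = 1 rows, and tapback
    reactions ('Liked ...') are skipped. Rows with NULL text (e.g.
    attachment-only messages) fail NOT LIKE, so SQLite drops them too.
    """
    rows = conn.execute(
        "SELECT text FROM message "
        "WHERE is_from_me = 1 AND text NOT LIKE 'Liked%' "
        "ORDER BY ROWID"
    )
    lines = []
    for (text,) in rows:
        # Join multi-line messages so each message becomes one training line
        # (an assumption; the sqlite3 shell keeps the embedded newlines).
        flat = " ".join(text.splitlines()).strip()
        if flat:  # skip empty messages
            lines.append(flat)
    return lines


def write_lines(lines: list[str], out_path: str) -> None:
    """Write one message per line as UTF-8, like `.once libby-imessage.txt`."""
    with open(out_path, "w", encoding="utf-8") as f:
        f.writelines(line + "\n" for line in lines)
```

Usage would be something like `write_lines(export_imessage(sqlite3.connect(path)), "libby-imessage.txt")`, where `path` points at the backup file above (ideally a copy of it, so the backup itself is never touched).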

WhatsApp messages are in

/Users/[me]/Library/Application\ Support/MobileSync/Backup/[long number]/[short number]/7c7fba66680ef796b916b067077cc246adacf01d

sqlite3 7c7fba66680ef796b916b067077cc246adacf01d

.once libby-whatsapp.txt

SELECT ZTEXT from ZWAMESSAGE where ZISFROMME='1' and ZTEXT!='';
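The long hex filenames aren't random. As far as I know, an iPhone backup names each file after the SHA-1 hash of its domain and relative path joined with a hyphen, and newer backups store it in a subfolder named after the first two characters of that hash (the [short number] above). Here is a minimal sketch of that naming scheme; the function names are mine, and the domain/path strings are something you'd need to look up for a given app.

```python
import hashlib
from pathlib import Path


def backup_file_id(domain: str, relative_path: str) -> str:
    """Name an iOS backup gives a file: SHA-1 hex digest of 'domain-relativePath'."""
    return hashlib.sha1(f"{domain}-{relative_path}".encode("utf-8")).hexdigest()


def backup_file_path(backup_dir: Path, domain: str, relative_path: str) -> Path:
    """Where that file sits in a newer-style backup: <first 2 hex chars>/<full hash>."""
    file_id = backup_file_id(domain, relative_path)
    return Path(backup_dir) / file_id[:2] / file_id
```

For example, `backup_file_id("HomeDomain", "Library/SMS/sms.db")` should reproduce the iMessage filename above. If a hash doesn't match what's on disk, the backup's Manifest.db (another SQLite file) has a `Files` table mapping `fileID` to `domain` and `relativePath`, which is the more reliable place to look.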

Signal Desktop's message store is encrypted, so you need to use this, which I could only get to work using Docker. Signal doesn't back up from your phone (its messages aren't in the iPhone backup), so the desktop client is the only source.

Tweaks for fine-tuning on an M1 Mac

git clone git@github.com:nshepperd/gpt-2.git

cd gpt-2

git checkout finetuning

mkdir data

mv libby*.txt data/

pip3 install -r requirements.txt

python3 ./download_model.py 117M

pip3 install tensorflow-macos # for the M1

PYTHONPATH=src ./train.py --model_name=117M --dataset data/
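My reading of nshepperd's load_dataset.py is that when `--dataset` points at a directory, it walks it and reads every file it finds, so anything stray in data/ ends up in the training set. A quick sketch (the function name is mine) to see what's in there and roughly how many lines each file contributes before starting a long run:

```python
import os
from pathlib import Path


def dataset_line_counts(data_dir: str) -> dict[str, int]:
    """Count non-empty lines in every file under data_dir, including subfolders,
    since a directory dataset is read file by file, not just *.txt."""
    counts = {}
    for dirpath, _, filenames in os.walk(data_dir):
        for name in sorted(filenames):
            path = Path(dirpath) / name
            # errors="replace" so an odd byte doesn't stop the count
            with open(path, encoding="utf-8", errors="replace") as f:
                counts[str(path)] = sum(1 for line in f if line.strip())
    return counts
```

Something like `for path, n in dataset_line_counts("data").items(): print(n, path)` is enough to spot a missing export or an unexpectedly small file.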

tensorflow-macos is TF 2, but that seems OK, even though I only run TF 1.13 on the Pi.

Then rename the model and copy over the bits you need from the initial 117M model:

cp -r checkpoint/run1 models/libby

cp models/117M/{encoder.json,hparams.json,vocab.bpe} models/libby/
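The `{encoder.json,hparams.json,vocab.bpe}` brace expansion is a bash/zsh feature. If you'd rather do this step from Python, or want it to fail loudly when a file is missing, here is a sketch with shutil; the function name is mine, and the paths and filenames are the ones from the commands above.

```python
import shutil
from pathlib import Path

# Files the GPT-2 code loads alongside the checkpoint, taken from the base model.
BASE_FILES = ("encoder.json", "hparams.json", "vocab.bpe")


def make_model_dir(checkpoint_dir: Path, base_model_dir: Path, out_dir: Path) -> list[str]:
    """Copy a fine-tuned checkpoint to out_dir plus the base model's
    encoder/hparams/vocab files; return the sorted names now in out_dir."""
    missing = [n for n in BASE_FILES if not (base_model_dir / n).is_file()]
    if missing:
        raise FileNotFoundError(f"not in {base_model_dir}: {', '.join(missing)}")
    # Like `cp -r checkpoint/run1 models/libby` when models/libby doesn't exist yet;
    # copytree raises FileExistsError rather than copying into an existing folder.
    shutil.copytree(checkpoint_dir, out_dir)
    for name in BASE_FILES:
        shutil.copy2(base_model_dir / name, out_dir / name)
    return sorted(p.name for p in out_dir.iterdir())
```

Called as `make_model_dir(Path("checkpoint/run1"), Path("models/117M"), Path("models/libby"))` from the gpt-2 directory.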

Pi 4

The only new part on the Pi 4 was that I had to install a specific version of numpy; the rest is the same as my original instructions here.

pip3 install flask numpy==1.20

curl -O https://www.piwheels.org/simple/tensorflow/tensorflow-1.13.1-cp37-none-linux_armv7l.whl

pip3 install tensorflow-1.13.1-cp37-none-linux_armv7l.whl