Notes on a TV-to-Radio prototype

In the last couple of weeks at work we've been making "radios" in order to test the Radiodan prototyping approach. We each took an idea and tried to do a partial implementation. Here are some notes about mine. It's not really a radio.

Idea

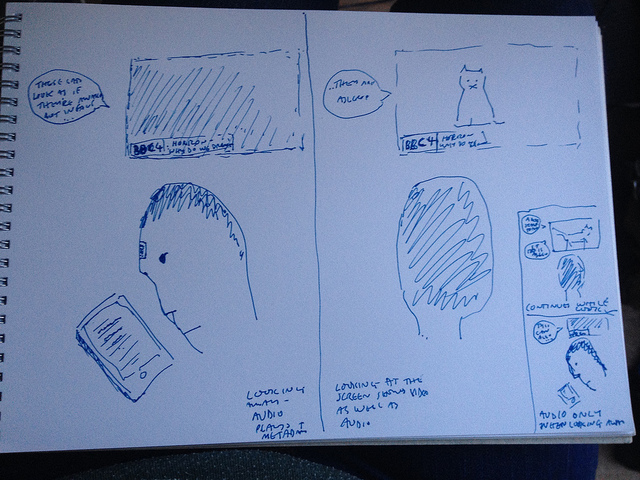

Make a TV that only shows the screen when someone is looking, else just play sound.

This is a response to the seemingly-pervasive idea that the only direction for TV is for devices and broadcasts (and catchup) to get more and more pixels. Evidence suggests that, at least sometimes, TV is becoming radio with a large screen that is barely - but still sometimes - paid attention to.

There are a number of interesting things to think about once you see TV and radio as part of a continuum (like @fantasticlife does). One has to do with attention availability, and specifically the use cases that overlap with bandwidth availability.

As I watch something on TV and then move to my commuting device, why can't it switch to audio only or text as bandwidth or attention allows? If it's important for me to continue then why not let me continue in any way that's possible or convenient? My original idea was to do a demo showing that as bandwidth falls, you get less and less resolution for the video, then audio (using the TV audio description track?) then text (then a series of vibrations or lights conveying mood?). There are obvious accessibility applications for this idea.
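A rough sketch of that fallback ladder, to make the idea concrete. The format names, the bandwidth thresholds and the `needs_eyes` flag are all made up for illustration; a real version would need measured numbers and a proper notion of attention.

```python
from typing import NamedTuple


class Rung(NamedTuple):
    name: str
    min_kbps: int     # hypothetical minimum bandwidth for this format
    needs_eyes: bool  # only worth sending if someone is looking


# Richest format first. All thresholds are illustrative guesses.
LADDER = [
    Rung("video-high", 3000, True),
    Rung("video-low", 500, True),
    Rung("audio", 64, False),
    Rung("text", 1, True),
    Rung("vibration", 0, False),
]


def choose_format(kbps: float, looking: bool) -> str:
    """Return the richest format that bandwidth and attention both allow."""
    for rung in LADDER:
        if kbps >= rung.min_kbps and (looking or not rung.needs_eyes):
            return rung.name
    return LADDER[-1].name
```

With plenty of bandwidth but nobody looking, this drops straight to audio, which is the TV-as-radio case; with almost no bandwidth it ends up at text or vibration.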

Then, pushing it further to make a more interesting demo: much TV is consumed while doing something else. Most of the time you don't need the pictures, only when you are looking. So can we solve that? Would it be bad if we did? What would it be like?

SO: my goal is a startling demo of mixed format content delivery according to immediate user needs as judged by attention paid to the screen.

I'd also like to make some sillier variants:

- Make a radio that is only on when someone is looking.

- Make a TV that only shows the screen when someone is not looking.

I got part of the way - I can blank a screen when someone is facing it (or the reverse). Some basic code is here. Notes on installing it are below. It's a slight update to this incredibly useful and detailed guide. The differences are that the face recognition code is now part of OpenCV, which makes it easier, and I'm not interested in whose face it is, only that there is one (or more than one), which simplifies things a bit.

I got as far as blanking the screen when it detects a face. I haven't yet got it to work with video, but I think it has potential.

Implementation notes

My preferred platform is the Raspberry Pi, because then I can leave it places for demos, make several cheaply etc. Though in this case using the Pi caused some problems.

Basically I need

1. something to do face detection

2. something to blank the screen

3. something to play video

1. Face detection turned out to be the reasonably easy part.

A number of people have tried using OpenCV for face detection on the Pi and got it working, either with C++ or using Python. For my purposes, Python was much too slow - about 10 secs to process the video - but C++ was sub-second, which is good enough I think. Details are below.
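For reference, the "is there a face at all?" check looks roughly like this with OpenCV's Haar cascade classifier. This is a Python sketch for readability, not the C++ I ended up using, and the cascade path is an assumption about where the Debian/Raspbian package puts the file; adjust it for your install.

```python
# Assumed location of the stock frontal-face cascade; check your own system.
CASCADE_PATH = "/usr/share/opencv/haarcascades/haarcascade_frontalface_default.xml"


def load_classifier(path=CASCADE_PATH):
    """Load a Haar cascade. OpenCV is imported lazily, only when needed."""
    import cv2
    classifier = cv2.CascadeClassifier(path)
    if classifier.empty():
        raise IOError("could not load cascade from %s" % path)
    return classifier


def face_count(gray_frame, classifier, min_size=(40, 40)):
    """Number of faces found in a greyscale frame."""
    faces = classifier.detectMultiScale(
        gray_frame, scaleFactor=1.2, minNeighbors=5, minSize=min_size)
    return len(faces)


def someone_is_looking(gray_frame, classifier):
    """True if at least one face is visible; we don't care whose it is."""
    return face_count(gray_frame, classifier) > 0
```

The frame needs to be greyscale first, for example via `cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)`. The `scaleFactor`, `minNeighbors` and `min_size` values are just starting points; larger values for `scaleFactor` and `min_size` should trade accuracy for speed.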

2. Screen blanking turned out to be much more difficult, especially when combined with C++ and my lack of experience of C++.

My initial thought was to do the whole thing in HTML, e.g. with a full screen browser and just hiding the video element when the face was not present. I'd done tests with Epiphany and knew it could manage HTML5 video (mp4 streaming). However on my Pi Epiphany point-blank refuses to run - segfaulting every time - and I've not worked out what's wrong (could be that I started with a non-clean Pi image, I've not tested it on a clean one yet). Also Epiphany can't run in kiosk mode, which would probably mean the experience wouldn't be great. So I looked into lower-level commands that should work to blank the screen whatever is playing.

First (thanks to Andrew's useful notes) I started looking at CEC, an HDMI-level control interface that allows you to do things like switch on a TV. Raspberry Pi uses this, also DVD players and the like. As far as I can tell however, there are no CEC commands to blank the screen (although you can switch the TV off, which isn't what I want as it's too slow).

There's also something called DPMS, which allows you to put a screen into hibernation and other power-saving modes, for HDMI and DVI too, I think. On Linux systems you can set it using xset; however, it only seems to work once. I lost a lot of time on this. DPMS is pretty messy and hard to debug. After much struggling, thinking it was my C++ / system calls that were the problem, replacing them with an X library, and finally making shell scripts and small C++ testcases, I figured it was just a bug.

Just for added fun, there's no vbetool on the Pi, which would have been another method, and tvservice is too heavyweight.

I finally got it to work with xset s, which makes a (blank?) screensaver come on, and is near-instant, though that means I have to run X.

xset s activate #screen off

xset s reset #screen on
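Tying those two commands to the face detector could look something like the sketch below. It is not my actual code (that's C++), and the `ScreenBlanker` class and its `show_when_looking` flag are names invented here. It only calls xset when the state changes, so a steady stream of "face" or "no face" results doesn't spawn a process on every frame.

```python
import subprocess


class ScreenBlanker:
    """Blank or unblank an X display, calling xset only on a state change."""

    def __init__(self, run=subprocess.call, show_when_looking=True):
        self.run = run                      # injectable, so it can be faked
        self.show_when_looking = show_when_looking
        self.blanked = None                 # unknown until the first update

    def update(self, face_seen):
        """Feed in the latest detection result; returns True if blanked."""
        if self.show_when_looking:
            want_blank = not face_seen
        else:
            want_blank = face_seen          # the sillier, reversed variant
        if want_blank != self.blanked:
            action = "activate" if want_blank else "reset"
            self.run(["xset", "s", action])
            self.blanked = want_blank
        return self.blanked
```

This needs a running X session and `DISPLAY` set in the environment (e.g. `:0`), otherwise xset has nothing to talk to. Setting `show_when_looking=False` gives the variant where the screen only shows when nobody is looking.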

3. Something to play video

This is as far as I've got: I need to do a clean install and try Epiphany again, and also work out why OMXPlayer seems to cancel the screensaver somehow.

--------------------------------------------------------

Links

Installing opencv on a pi

- https://thinkrpi.wordpress.com/2013/05/22/opencv-and-camera-board-csi/

Face recognition and opencv

- http://docs.opencv.org/trunk/modules/contrib/doc/facerec/tutorial/facerec_video_recognition.html

- http://docs.opencv.org/modules/contrib/doc/facerec/facerec_tutorial.html

Python camera / face detection

- https://github.com/Self-Driving-Vehicle/rc-car-controller

- https://github.com/shantnu/Webcam-Face-Detect

- https://rdmilligan.wordpress.com/2014/03/16/face-detection-on-raspberry-pi/

- https://realpython.com/blog/python/face-detection-in-python-using-a-webcam/

CEC

- http://andrewnicolaou.co.uk/posts/control-tv-cec

- https://github.com/Pulse-Eight/libcec/blob/master/include/cec.h

- http://blog.endpoint.com/2012/11/using-cec-client-to-control-hdmi-devices.html

- http://www.cec-o-matic.com

- http://www.raspberrypi.org/forums/viewtopic.php?f=29&t=53481

Best guide on power save / screen blanking

- http://www.shallowsky.com/linux/x-screen-blanking.html

DPMS example code

- http://www.karlrunge.com/x11vnc/blockdpy.c

- https://github.com/danfuzz/xscreensaver/blob/master/driver/dpms.c

xset

- http://www.x.org/archive/X11R7.6/doc/man/man1/xset.1.xhtml

C++

- linking X11 libs: http://stackoverflow.com/questions/17405722/linker-dont-use-library-but-i-asked-to-do-this

- https://gcc.gnu.org/ml/gcc-help/2003-11/msg00107.html

- compiling http://stackoverflow.com/questions/14919366/how-to-compile-library-on-c-using-gcc

- system calls and similar http://stackoverflow.com/questions/5687928/execv-quirks-concerning-arguments and http://www.cplusplus.com/forum/unices/2047/

xscreensaver

- http://www.jwz.org/xscreensaver/man3.html

vbetool

- https://bbs.archlinux.org/viewtopic.php?id=66169

- http://www.raspberrypi.org/forums/viewtopic.php?f=66&t=12261&start=125

tvservice

- http://www.raspberrypi.org/forums/viewtopic.php?t=13801

- http://www.raspberrypi.org/forums/viewtopic.php?f=67&t=25933

Samsung tv hacking

- http://blog.endpoint.com/2012/11/using-cec-client-to-control-hdmi-devices.html

Run browser on startup

- http://www.raspberry-projects.com/pi/pi-operating-systems/raspbian/gui/auto-run-browser-on-startup

Notes on installing and running opencv on a pi with video

1. sudo raspi-config

expand file system

enable camera

reboot

2. sudo apt-get update && sudo apt-get -y upgrade

3. cd /opt/vc

sudo git clone https://github.com/raspberrypi/userland.git

sudo apt-get install cmake

cd /opt/vc/userland

sudo chown -R pi:pi .

sed -i 's/DEFINED CMAKE_TOOLCHAIN_FILE/NOT DEFINED CMAKE_TOOLCHAIN_FILE/g' makefiles/cmake/arm-linux.cmake

sudo mkdir build

cd build

sudo cmake -DCMAKE_BUILD_TYPE=Release ..

sudo make

sudo make install

Go to /opt/vc/bin and test the camera by typing: ./raspistill -t 3000

4. install opencv

sudo apt-get install libopencv-dev python-opencv

cd

mkdir camcv

cd camcv

cp -r /opt/vc/userland/host_applications/linux/apps/raspicam/* .

curl https://gist.githubusercontent.com/libbymiller/13f1cd841c5b1b3b7b41/raw/b028cb2350f216b8be7442a30d6ee7ce3dc54da5/gistfile1.txt > camcv_vid3.cpp

edit CMakeLists.txt:

[[

cmake_minimum_required(VERSION 2.8)

project( camcv_vid3 )

SET(COMPILE_DEFINITIONS -Werror)

#OPENCV

find_package( OpenCV REQUIRED )

include_directories(/opt/vc/userland/host_applications/linux/libs/bcm_host/include)

include_directories(/opt/vc/userland/host_applications/linux/apps/raspicam/gl_scenes)

include_directories(/opt/vc/userland/interface/vcos)

include_directories(/opt/vc/userland)

include_directories(/opt/vc/userland/interface/vcos/pthreads)

include_directories(/opt/vc/userland/interface/vmcs_host/linux)

include_directories(/opt/vc/userland/interface/khronos/include)

include_directories(/opt/vc/userland/interface/khronos/common)

include_directories(./gl_scenes)

include_directories(.)

add_executable(camcv_vid3 RaspiCamControl.c RaspiCLI.c RaspiPreview.c camcv_vid3.cpp

RaspiTex.c RaspiTexUtil.c

gl_scenes/teapot.c gl_scenes/models.c

gl_scenes/square.c gl_scenes/mirror.c gl_scenes/yuv.c gl_scenes/sobel.c tga.c)

target_link_libraries(camcv_vid3 /opt/vc/lib/libmmal_core.so

/opt/vc/lib/libmmal_util.so

/opt/vc/lib/libmmal_vc_client.so

/opt/vc/lib/libvcos.so

/opt/vc/lib/libbcm_host.so

/opt/vc/lib/libGLESv2.so

/opt/vc/lib/libEGL.so

${OpenCV_LIBS}

pthread

-lm)

]]

cmake .

make

./camcv_vid3